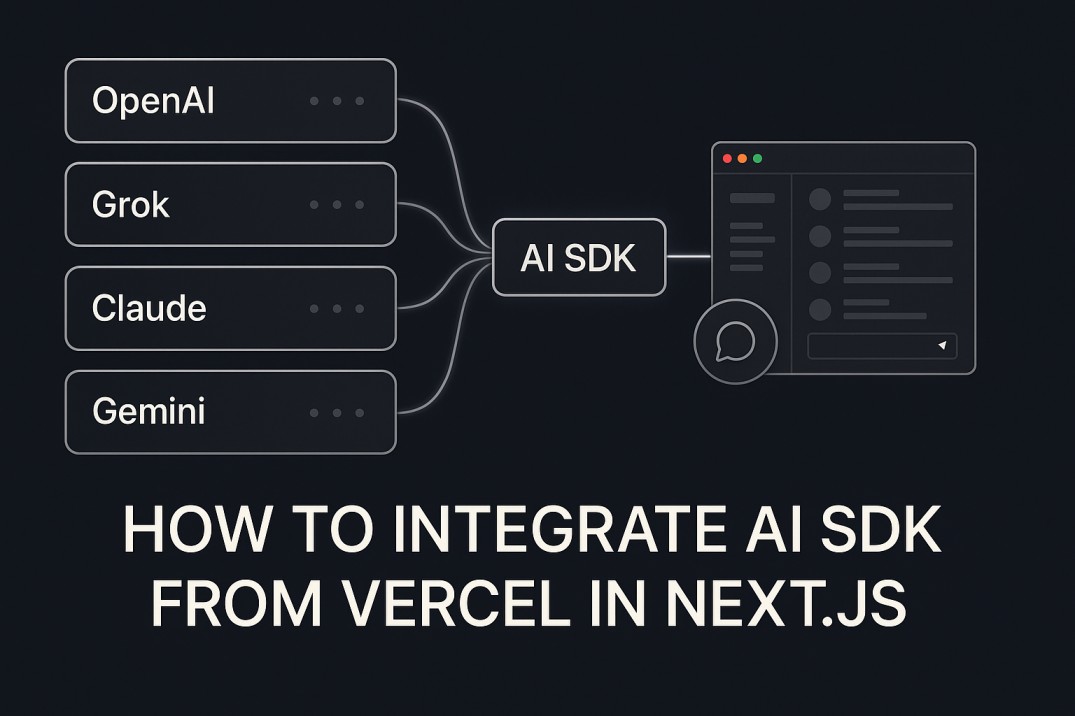

How to Integrate the AI SDK from Vercel in Next.js

So recently I started exploring the AI SDK from Vercel, and honestly, it’s a game changer if you’re building AI-powered apps with Next.js. I’ve used a few SDKs before, but this one feels especially natural when you’re already on Next.js. It’s built around simplicity and TypeScript type safety, and it works smoothly with modern Next.js features like server actions, the App Router, and streaming.

Let me walk you through everything step by step and explain it in a simple way. I’ll also show examples of how to use API routes and server actions, and how to work with models from different providers like OpenAI, Google (Gemini), xAI (Grok), and Anthropic (Claude).

Why I Actually Like This SDK

When I started using AI models in projects, I always ended up writing repetitive code. Each provider had its own API structure and its own authentication style, and it quickly became a mess. The AI SDK from Vercel solves this problem beautifully: you import a provider’s model, pass it to one common function, and that’s it. You can switch models easily too, without rewriting your logic.

It also integrates perfectly with Next.js. You can run it inside API routes, server actions, or even directly inside server components. That’s what makes it powerful and developer-friendly at the same time.

Setting Things Up

Alright, so the first thing you do is set up your Next.js app. You can create one by running npx create-next-app@latest.

Once it’s ready, install the AI SDK packages, plus Zod for the schema examples later: npm install ai @ai-sdk/openai @ai-sdk/react zod

The ai package is the main one. It provides core functions like generateText, streamText, and generateObject. The @ai-sdk/openai package gives you access to OpenAI models, and @ai-sdk/react provides React hooks such as useChat for building front-end chat interfaces.

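After that, create a .env.local file in the project root and add your API keys, for example OPENAI_API_KEY=your-key-here. The OpenAI provider reads that variable by default.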

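Never expose your API keys in client-side code, and don’t give these variables the NEXT_PUBLIC_ prefix, since Next.js inlines NEXT_PUBLIC_ variables into the browser bundle. Keep all AI calls on the server.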

Creating an API Route for AI Calls

Let’s start simple. Suppose you want to send a prompt to an AI model and get a response back. You can create an API route inside app/api/chat/route.ts.

Here’s an example:
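A minimal sketch of what this route could look like, assuming AI SDK 5 with the @ai-sdk/openai provider. The model ID gpt-4o-mini and the { prompt } request body shape are assumptions, so swap in whatever you use:

```typescript
// app/api/chat/route.ts
import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';

export async function POST(req: Request) {
  // Expect a JSON body like { "prompt": "..." }; malformed JSON becomes null.
  const body = await req.json().catch(() => null);
  const prompt = body?.prompt;

  if (typeof prompt !== 'string' || prompt.trim() === '') {
    return Response.json({ error: 'prompt must be a non-empty string' }, { status: 400 });
  }

  // The OpenAI provider reads OPENAI_API_KEY from the environment.
  const { text } = await generateText({
    model: openai('gpt-4o-mini'), // example model ID (assumption)
    prompt,
  });

  return Response.json({ text });
}
```

From the browser you would call it with fetch('/api/chat', { method: 'POST', headers: { 'Content-Type': 'application/json' }, body: JSON.stringify({ prompt: 'Hello' }) }) and read the text field from the JSON response.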

In this example, when you send a POST request with a prompt to the /api/chat endpoint, the handler passes the prompt to an OpenAI model and returns the generated text as JSON.

You can test this easily using fetch or Postman. This is also where you can experiment with different models later.

Using AI SDK in Server Actions

If you’re using the App Router, you can skip the API route and use a server action instead. This is a cleaner approach for AI calls that only your own components make.

Here’s how that might look:
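A minimal sketch, assuming AI SDK 5; the file name app/actions.ts and the function name generateAnswer are hypothetical:

```typescript
// app/actions.ts
'use server';

import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';

// Runs only on the server, so OPENAI_API_KEY never reaches the browser.
export async function generateAnswer(prompt: string): Promise<string> {
  if (prompt.trim() === '') {
    throw new Error('Prompt must not be empty');
  }

  const { text } = await generateText({
    model: openai('gpt-4o-mini'), // example model ID (assumption)
    prompt,
  });

  return text;
}
```

A client component can import generateAnswer and await it directly, for example inside a form’s submit handler; Next.js turns that call into a request to the server for you.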

Everything here runs on the server, so you don’t need to write an API endpoint yourself. It feels nice and direct.

Making a Chat Interface with useChat

Now if you want to build a proper chat interface that streams messages in real time, you can use the useChat hook from @ai-sdk/react.

Here’s a small example:
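A minimal sketch of a chat-client.tsx client component, assuming the AI SDK 5 version of useChat, which returns messages, sendMessage, and status and stores each message’s content in a parts array (earlier versions of the hook had a different API). By default it posts to /api/chat and expects a streamed response:

```tsx
'use client';

import { useState } from 'react';
import { useChat } from '@ai-sdk/react';

export default function ChatClient() {
  // useChat manages the message history and streaming; by default it POSTs to /api/chat.
  const { messages, sendMessage, status } = useChat();
  const [input, setInput] = useState('');
  const busy = status === 'submitted' || status === 'streaming';

  return (
    <div>
      {messages.map((message) => (
        <div key={message.id}>
          <strong>{message.role === 'user' ? 'You' : 'AI'}: </strong>
          {/* Each message is a list of parts; this sketch only renders text parts. */}
          {message.parts.map((part, index) =>
            part.type === 'text' ? <span key={index}>{part.text}</span> : null,
          )}
        </div>
      ))}

      <form
        onSubmit={(event) => {
          event.preventDefault();
          if (input.trim() === '') return;
          sendMessage({ text: input });
          setInput('');
        }}
      >
        <input
          value={input}
          onChange={(event) => setInput(event.target.value)}
          placeholder="Ask something..."
          disabled={busy}
        />
        <button type="submit" disabled={busy}>
          Send
        </button>
      </form>
    </div>
  );
}
```

Disabling the input while status is submitted or streaming keeps users from sending a second message before the current answer finishes.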

This hook automatically handles sending prompts, receiving streamed responses, and updating UI state. You can customize the UI however you want, but the core logic is already handled by the SDK.
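Because useChat sends the whole conversation and expects a stream, the JSON route described earlier won’t work with it as-is. A minimal sketch of a streaming version of app/api/chat/route.ts, assuming AI SDK 5 (streamText, convertToModelMessages, and toUIMessageStreamResponse; AI SDK 4 used toDataStreamResponse instead) and the same example model ID:

```typescript
// app/api/chat/route.ts (streaming variant for useChat)
import { streamText, convertToModelMessages, type UIMessage } from 'ai';
import { openai } from '@ai-sdk/openai';

export async function POST(req: Request) {
  // useChat sends the full conversation as UI messages.
  const { messages }: { messages: UIMessage[] } = await req.json();

  // streamText starts generating right away and returns a streamable result.
  const result = streamText({
    model: openai('gpt-4o-mini'), // example model ID (assumption)
    messages: convertToModelMessages(messages),
  });

  // Stream the response back in the format useChat understands.
  return result.toUIMessageStreamResponse();
}
```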

Structured Outputs with Schemas

Sometimes we don’t want plain text. For example, you may want a structured JSON output. That’s where generateObject comes in handy. You can define a schema using Zod and get a clean, validated object back.

Here’s an example:
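A minimal sketch, assuming AI SDK 5 and Zod; the recipe schema and the generateRecipe function are hypothetical examples:

```typescript
import { generateObject } from 'ai';
import { openai } from '@ai-sdk/openai';
import { z } from 'zod';

// Hypothetical schema describing the shape we want back.
export const recipeSchema = z.object({
  name: z.string(),
  ingredients: z.array(z.object({ name: z.string(), amount: z.string() })),
  steps: z.array(z.string()),
});

export type Recipe = z.infer<typeof recipeSchema>;

export async function generateRecipe(dish: string): Promise<Recipe> {
  const { object } = await generateObject({
    model: openai('gpt-4o-mini'), // example model ID (assumption)
    schema: recipeSchema,
    prompt: `Generate a recipe for ${dish}.`,
  });

  // object has already been validated against recipeSchema.
  return object;
}
```

If the model’s output can’t be parsed or doesn’t match the schema, generateObject throws instead of handing you malformed data, so callers can rely on the Recipe type.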

This is super useful when you need predictable results like structured data for recipes, products, summaries, or blog outlines.

Switching Between Different AI Models

One of my favorite parts of this SDK is that you can easily switch between AI providers. For example, you can use OpenAI, Gemini (Google), Claude (Anthropic), or even Grok (xAI). The best part is you don’t have to rewrite your logic.

Here’s how that might look:
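A minimal sketch, assuming AI SDK 5 with the @ai-sdk/openai, @ai-sdk/google, @ai-sdk/anthropic, and @ai-sdk/xai packages installed and each provider’s key set (by default they read OPENAI_API_KEY, GOOGLE_GENERATIVE_AI_API_KEY, ANTHROPIC_API_KEY, and XAI_API_KEY). The model IDs are examples only, so check each provider’s current model list; the ask helper is hypothetical:

```typescript
import { generateText, type LanguageModel } from 'ai';
import { openai } from '@ai-sdk/openai';
import { google } from '@ai-sdk/google';
import { anthropic } from '@ai-sdk/anthropic';
import { xai } from '@ai-sdk/xai';

// Example model IDs (assumptions); only this map changes between providers.
const models = {
  openai: openai('gpt-4o-mini'),
  gemini: google('gemini-2.5-flash'),
  claude: anthropic('claude-3-5-haiku-latest'),
  grok: xai('grok-3-mini'),
} satisfies Record<string, LanguageModel>;

export type ProviderName = keyof typeof models;

// Same function, same call shape, whichever provider is picked.
export async function ask(provider: ProviderName, prompt: string): Promise<string> {
  const { text } = await generateText({ model: models[provider], prompt });
  return text;
}
```

Calling ask('claude', 'Summarize this article') instead of ask('openai', ...) is the only change needed to switch providers.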

It’s the same function, same structure. Just the model provider changes. That’s the flexibility I really love about this SDK.

Using Tools and Agents

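This one’s a bit advanced, but if you want your AI to perform real tasks like calling APIs or fetching weather data, you can define tools. A tool is a function with a description and an input schema that the model can ask to call; the SDK runs it and passes the result back to the model.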

Here’s a small example:
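A minimal sketch, assuming AI SDK 5, where tools declare their input with inputSchema and multi-step calls are enabled with stopWhen (AI SDK 4 used parameters and maxSteps instead). lookupWeather and askWithTools are hypothetical helpers, and lookupWeather returns fake data; replace it with a real weather API call:

```typescript
import { generateText, tool, stepCountIs } from 'ai';
import { openai } from '@ai-sdk/openai';
import { z } from 'zod';

// Hypothetical helper returning fake data so the sketch runs without an external API.
export async function lookupWeather(city: string) {
  return { city, temperatureC: 21, condition: 'sunny' };
}

export const getWeather = tool({
  description: 'Get the current weather for a city',
  inputSchema: z.object({
    city: z.string().describe('City name, e.g. "Berlin"'),
  }),
  execute: async ({ city }) => lookupWeather(city),
});

export async function askWithTools(prompt: string): Promise<string> {
  const { text } = await generateText({
    model: openai('gpt-4o-mini'), // example model ID (assumption)
    tools: { getWeather },
    // Allow a second step so the model can answer using the tool result.
    stopWhen: stepCountIs(2),
    prompt,
  });
  return text;
}
```

With a prompt like "What’s the weather in Berlin?", the model can call getWeather in the first step and use its result to write the answer in the second.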

The model decides when to call this function based on the user’s prompt and the tool’s description. It’s pretty cool when you start experimenting with real-world integrations.

Some Tips from My Experience

- Always keep your API keys safe and on the server side.

- Use streaming for faster user feedback.

- Use Zod schemas for predictable and validated outputs.

- Try different models and see which one fits your use case best.

- If cost or speed matters, you can easily switch between models.

- For complex actions, combine tool calling and structured outputs.

Folder Structure Example

Here’s how your folder structure might look:

- app/api/chat/route.ts handles the backend logic.

- chat-client.tsx is your front-end chat interface.

- page.tsx renders the chat UI and manages the layout.

It’s that simple.

Final Thoughts

The AI SDK from Vercel makes it easy to integrate AI features into any Next.js app. You can go from zero to a working chatbot or content generator in minutes. What I personally liked most is how consistent the SDK feels no matter which AI model you use. Whether it’s OpenAI, Gemini, Claude, or Grok, the code remains mostly the same.

If you’re serious about building AI-driven apps, I’d say spend some time with this SDK. It’s built to handle complex stuff like streaming, structured output, and tool calling, but at the same time, it keeps things simple enough to get started instantly.

If you want to dive deeper and explore all the features, check out their official documentation.